You see the headlines, plastered across every feed: “Quantum Supremacy Achieved!” A digital gold rush, promising unimagined futures. But before you start mapping your exit strategy from classical computing, let’s talk about what *actually* happens when the dust settles on a quantum supremacy experiment. I’m not talking about the vendor demos; I’m talking about the raw, unvarnished truth from the trenches where these machines are actually being wrestled into submission.

Verifying the Quantum Supremacy Experiment

The allure of “quantum supremacy” hinges on demonstrating that a quantum computer can solve a specific problem orders of magnitude faster than any classical supercomputer. It’s a tantalizing prospect, a glimpse into a future where computations currently out of reach become routine. But here’s the kicker: backing up a supremacy claim is, in itself, a classical computation. You need a robust classical framework to verify the quantum output, to ensure the quantum processor didn’t just sneeze out random bits.

The Quantum Supremacy Experiment: Designing and Verifying

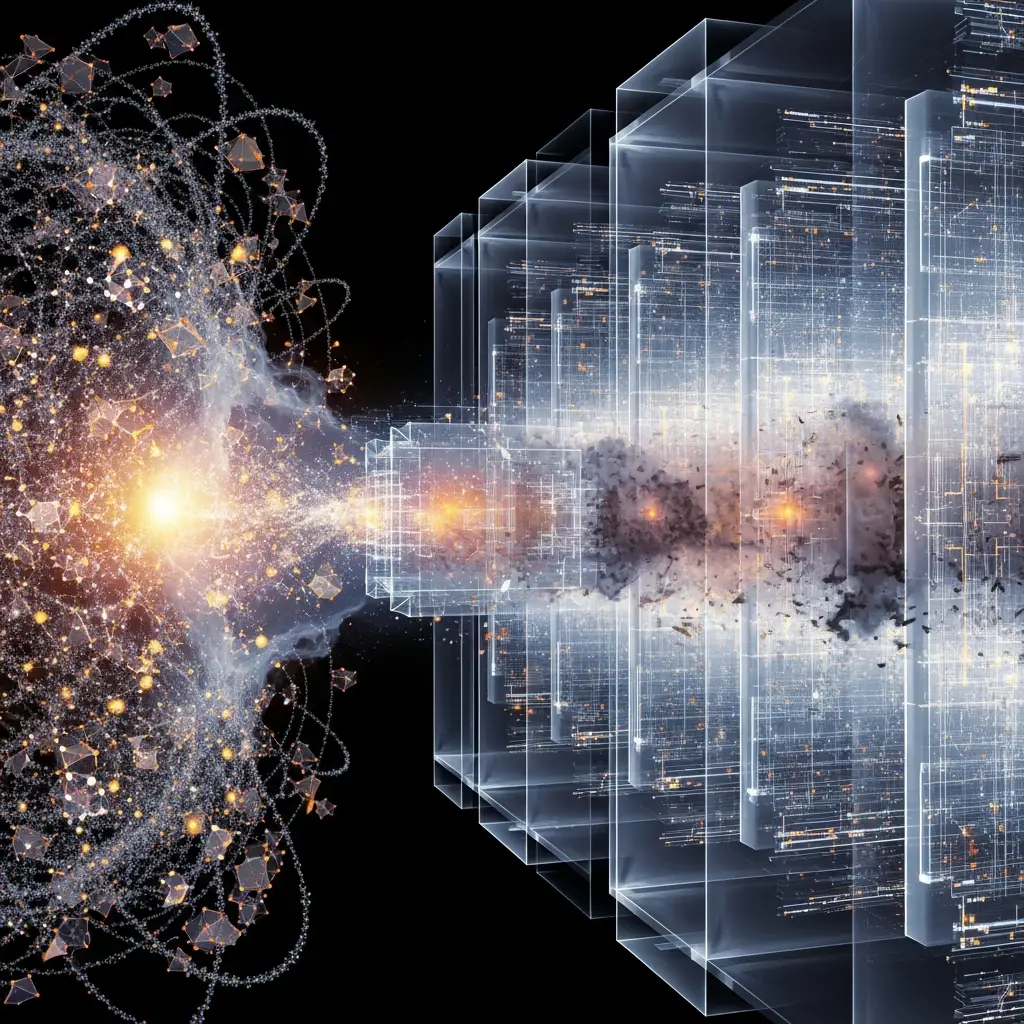

Consider the very nature of a quantum supremacy experiment. It’s designed to be *just* beyond the reach of even the most powerful classical machines, a meticulously chosen problem that exploits quantum phenomena like superposition and entanglement to an extreme degree. The quantum proposal is elegant, a symphony of qubits dancing in probabilistic harmony. The classical disposal, however, is the brute-force verification, a massive computational effort to confirm that the measured bitstrings follow the distribution the circuit predicts rather than uniform noise.
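To make that classical check concrete: one statistic widely used in random-circuit-sampling experiments is linear cross-entropy benchmarking (XEB), which scores measured bitstrings against classically computed ideal probabilities. This post doesn’t name the statistic it has in mind, so treat XEB here as an assumption. The function name `linear_xeb` and the 2-qubit distribution below are made up for illustration; this is a minimal sketch, not a production verifier.

```python
from typing import Mapping, Sequence


def linear_xeb(ideal_probs: Mapping[str, float], samples: Sequence[str]) -> float:
    """Linear XEB estimate: 2^n * mean(p_ideal(x_i)) - 1.

    Scores near 0 look like uniform noise; higher scores mean the samples
    favour the bitstrings the ideal circuit makes likely.
    """
    if not samples:
        raise ValueError("need at least one measured bitstring")
    n_qubits = len(samples[0])
    mean_prob = sum(ideal_probs.get(s, 0.0) for s in samples) / len(samples)
    return (2 ** n_qubits) * mean_prob - 1.0


# Toy "ideal" output distribution for a 2-qubit circuit (illustrative numbers,
# standing in for probabilities a classical simulator would compute).
ideal = {"00": 0.5, "01": 0.25, "10": 0.125, "11": 0.125}

device_like = ["00", "00", "01", "10"]  # skewed toward likely bitstrings
noise_like = ["00", "01", "10", "11"]   # one of each: looks uniform

xeb_device = linear_xeb(ideal, device_like)
xeb_noise = linear_xeb(ideal, noise_like)
print(xeb_device, xeb_noise)
```

The expensive part is hidden in `ideal`: for a real experiment, computing those probabilities is exactly the brute-force classical effort, which is why the verification cost scales so badly with circuit size.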

Quantum Supremacy Experiment Design and Measurement Logic

This is why the “Quantum Proposes, Classical Disposes” decision logic is so crucial for setting new benchmarks. Instead of passively accepting the quantum output, we can actively design our classical verification *as part of the quantum program itself*. This isn’t about conventional data cleaning; it’s about embedding measurement discipline and statistical analysis directly into the quantum circuit’s operational logic. It means treating “orphan measurement exclusion” not as an afterthought, but as a first-class citizen of the quantum computation, woven into the very fabric of the circuit layout and readout mapping.
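Here is a rough sketch of what building measurement discipline into the readout could look like. It assumes a hypothetical layout in which the last measured bit of every shot is a parity check on the data bits, and it drops any shot where that check fails before any statistics are computed. Whether this matches what “orphan measurement exclusion” means in practice is an assumption; the rule, the layout, and the names `keep_shot` and `filtered_counts` are all illustrative.

```python
from collections import Counter
from typing import Iterable


def keep_shot(shot: str) -> bool:
    """Hypothetical rule: the final bit must equal the parity of the data bits."""
    data, check = shot[:-1], shot[-1]
    return data.count("1") % 2 == int(check)


def filtered_counts(shots: Iterable[str]) -> Counter:
    """Histogram of data bits, built only from shots that pass the check."""
    return Counter(shot[:-1] for shot in shots if keep_shot(shot))


# Made-up readout: 3 data bits followed by 1 check bit per shot.
raw_shots = ["0000", "1011", "1001", "0110", "1001", "1101"]

counts = filtered_counts(raw_shots)
kept = sum(counts.values())
print(counts, f"kept {kept} of {len(raw_shots)} shots")
```

The design point is that the filter is decided by the circuit layout up front, not bolted on after someone has looked at the data.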

Quantum Supremacy Experiment: Proofs of Concept on Noisy Hardware

The ultimate test of this approach lies in tackling problems that are genuinely hard for classical computers, like instances of the Elliptic Curve Discrete Logarithm Problem (ECDLP). Standard resource estimates often assume pristine quantum hardware and flat, unoptimized circuits. However, by employing our “Quantum Proposes, Classical Disposes” framework—leveraging noise-robust Shor-style constructions, mapping group operations onto error-mitigated geometric patterns, and wrapping everything in a V5-style measurement discipline—we can demonstrate non-trivial ECDLP instances on current hardware that *appear* to be beyond reach under those conventional assumptions. The future isn’t waiting for quantum; quantum is being built, piece by noisy, error-mitigated piece, on the hardware we have today.
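The “classical disposes” half is easy to show for ECDLP, because checking a candidate answer is cheap even when finding it is hard: given a candidate k, you compute k·G and compare it with the target point Q. The sketch below uses a tiny textbook-sized curve over F_17 purely for illustration. The candidate list stands in for noisy quantum output, and the function names are assumptions, not anything taken from a real pipeline.

```python
from typing import Iterable, Optional, Tuple

Point = Optional[Tuple[int, int]]  # None represents the point at infinity

P_MOD, A = 17, 2   # toy curve y^2 = x^3 + 2x + 2 over F_17 (illustrative only)
G: Point = (5, 1)  # base point; it generates a group of prime order 19


def add(p: Point, q: Point) -> Point:
    """Add two points on the toy curve."""
    if p is None:
        return q
    if q is None:
        return p
    (x1, y1), (x2, y2) = p, q
    if x1 == x2 and (y1 + y2) % P_MOD == 0:
        return None  # p + (-p) is the point at infinity
    if p == q:
        slope = (3 * x1 * x1 + A) * pow((2 * y1) % P_MOD, -1, P_MOD) % P_MOD
    else:
        slope = (y2 - y1) * pow((x2 - x1) % P_MOD, -1, P_MOD) % P_MOD
    x3 = (slope * slope - x1 - x2) % P_MOD
    y3 = (slope * (x1 - x3) - y1) % P_MOD
    return (x3, y3)


def mul(k: int, p: Point) -> Point:
    """Scalar multiplication by double-and-add."""
    result: Point = None
    addend = p
    while k > 0:
        if k & 1:
            result = add(result, addend)
        addend = add(addend, addend)
        k >>= 1
    return result


def dispose(candidates: Iterable[int], target: Point) -> Optional[int]:
    """Return the first candidate k with k*G == target, else None."""
    for k in candidates:
        if mul(k, G) == target:
            return k
    return None


Q = mul(7, G)                         # public point; 7 plays the secret
accepted = dispose([3, 12, 7], Q)     # pretend these came from noisy shots
rejected = dispose([3, 12], Q)
print(Q, accepted, rejected)
```

A noisy run only has to put the right k somewhere among its candidates; the classical check throws out the rest. Requires Python 3.8 or later for `pow(x, -1, m)`.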
