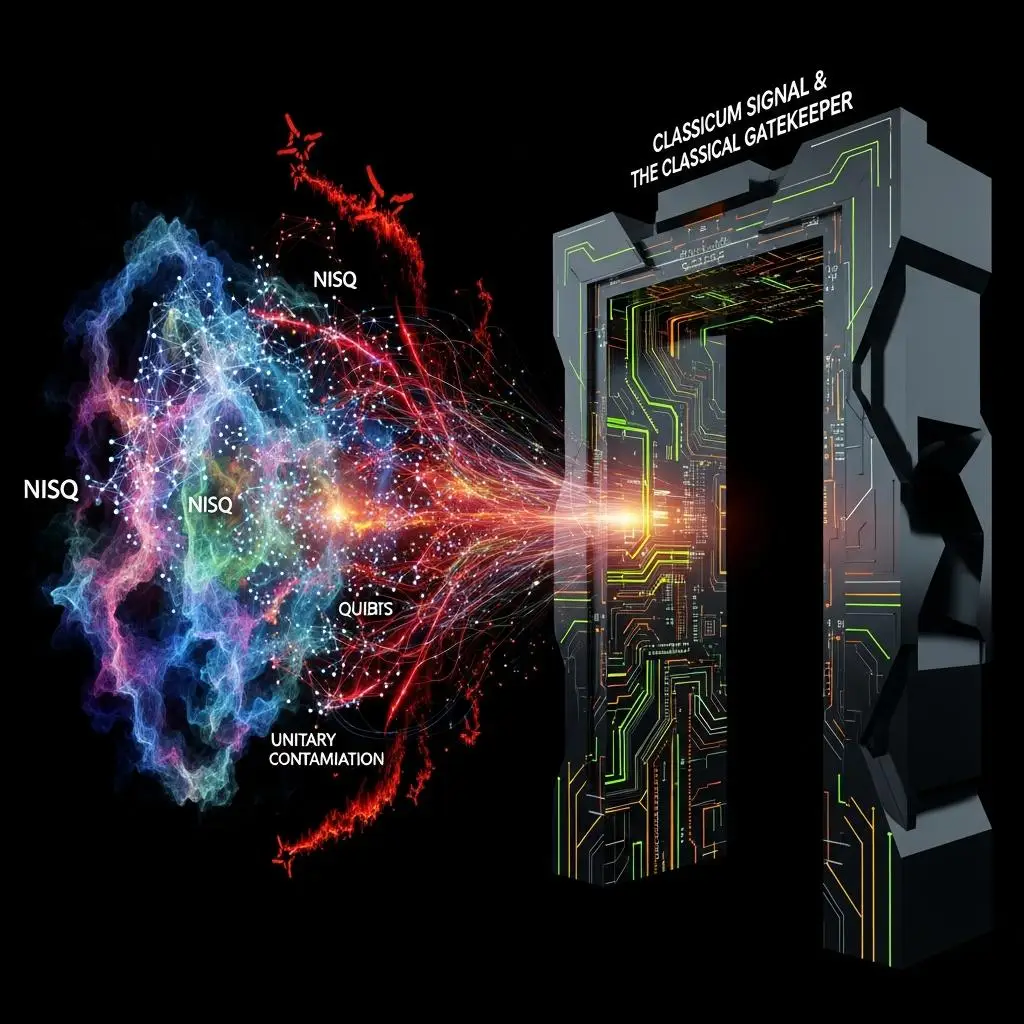

Every new quantum supremacy headline makes it sound like we’re on the cusp of some world-altering event. But here’s the thing most folks miss: the real story isn’t about the quantum computer “winning” a race. It’s about how the classical systems *behind* the quantum experiment ultimately decide whether the result counts. I recently dug into a particular quantum supremacy experiment, and what I found wasn’t a definitive “quantum is king” moment. It was a complex dance in which classical computation dictated the terms of victory or, more often, the terms of defeat.

Beyond Quantum Supremacy: The Practical Hurdles of Project Chimera

For those of us actually trying to get useful work out of these machines, the real question isn’t “Can a quantum computer do X?” It’s “Can a quantum computer do X *within the constraints of the classical system managing it*, and can we make it do so reliably enough to generate *publishable* results?” Consider a recent quantum supremacy experiment; let’s just call it “Project Chimera” for now.

Verifying the Quantum Supremacy Experiment

The paper, of course, highlights the “quantum advantage” it achieved. It glosses over the *classical algorithms* used to verify that advantage. The team ran sophisticated classical Monte Carlo methods, not to simulate the quantum circuit directly (the computationally expensive part they claim to avoid), but to *post-process* the quantum output and infer the target distribution. This classical verification step is where the decision logic truly resides.
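
The paper’s exact Monte Carlo procedure isn’t reproduced here, so the sketch below swaps in a different, widely used classical check for sampling experiments: the linear cross-entropy benchmarking (XEB) fidelity estimate, `F = 2**n * mean(p_ideal(x_i)) - 1`, where `p_ideal` comes from classically simulating the circuit. Treat it as a minimal illustration under stated assumptions, not Project Chimera’s pipeline. The “ideal” distribution is a random Porter-Thomas-like stand-in rather than a simulated circuit, and `sample_noisy_device` is a hypothetical global-depolarizing model in which each shot is an ideal sample with probability `fidelity` and a uniformly random bitstring otherwise.

```python
import itertools
import random


def random_ideal_probs(n_qubits, rng):
    """Hypothetical stand-in for the classically simulated ideal distribution.

    Assumption: amplitudes are i.i.d. complex Gaussians, normalized, so the
    probabilities follow Porter-Thomas-like statistics, as expected for deep
    random circuits. A real pipeline would simulate the actual circuit here.
    """
    dim = 2 ** n_qubits
    # |a|^2 for a complex Gaussian amplitude a = g1 + i*g2
    weights = [rng.gauss(0.0, 1.0) ** 2 + rng.gauss(0.0, 1.0) ** 2 for _ in range(dim)]
    total = sum(weights)
    return [w / total for w in weights]


def sample_noisy_device(ideal_probs, fidelity, n_samples, rng):
    """Hypothetical global-depolarizing device model.

    With probability `fidelity` a shot is drawn from the ideal distribution;
    otherwise the device returns a uniformly random bitstring (as an integer).
    """
    dim = len(ideal_probs)
    outcomes = range(dim)
    cum = list(itertools.accumulate(ideal_probs))  # precomputed for fast sampling
    samples = []
    for _ in range(n_samples):
        if rng.random() < fidelity:
            samples.append(rng.choices(outcomes, cum_weights=cum, k=1)[0])
        else:
            samples.append(rng.randrange(dim))
    return samples


def linear_xeb_fidelity(samples, ideal_probs):
    """Linear XEB estimate: 2^n * mean(p_ideal(x_i)) - 1.

    For Porter-Thomas-like p_ideal this is roughly 1 for ideal samples and
    roughly 0 for uniformly random bitstrings.
    """
    dim = len(ideal_probs)
    mean_p = sum(ideal_probs[x] for x in samples) / len(samples)
    return dim * mean_p - 1.0


if __name__ == "__main__":
    rng = random.Random(2024)
    probs = random_ideal_probs(n_qubits=10, rng=rng)
    for f in (1.0, 0.3, 0.0):
        shots = sample_noisy_device(probs, fidelity=f, n_samples=20000, rng=rng)
        print(f"model fidelity={f:.1f}  XEB estimate={linear_xeb_fidelity(shots, probs):.3f}")
```

Under this toy model the estimate should land near the model fidelity (about 1, 0.3, and 0), up to sampling noise. Note where the classical side sits: the score is only as meaningful as the `p_ideal` values fed into it, and for large circuits computing those probabilities requires classical simulation that is itself expensive.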

Assessing the Quantum Supremacy Experiment’s Classical Reliance

My take on this particular quantum supremacy experiment? The quantum hardware did its part, sampling bitstrings from a probability distribution. But the *real* hero, or perhaps the ultimate gatekeeper, was the classical inference engine. It’s what took the raw, noisy bitstrings and said, “Yes, this pattern aligns with what we expect from an advantage.” If that classical post-processing bottleneck can be solved efficiently, the quantum advantage is diluted or, at the very least, significantly reframed.

Navigating the Quantum Supremacy Experiment’s Classical Interplay

So, as you design your next experiments, push your quantum circuits and see what you can extract. But don’t forget to scrutinize the classical backend. In the end, it’s the classical system that signs off on your quantum findings, and it’s the classical system that decides whether your novel quantum circuit is a true benchmark or just a well-managed data-collection exercise for a classical algorithm. The real frontier is understanding this interplay and extracting signal even when the classical system is primed to discard it.

We’re seeing opportunities to perform complex cryptographic tasks on noisy intermediate-scale quantum (NISQ) hardware *today* by treating noise as a signal, not just an error. The question for your risk assessment: is your 3–5-year timeline for quantum adoption still valid when these “co-dependent” demonstrations are already happening?
