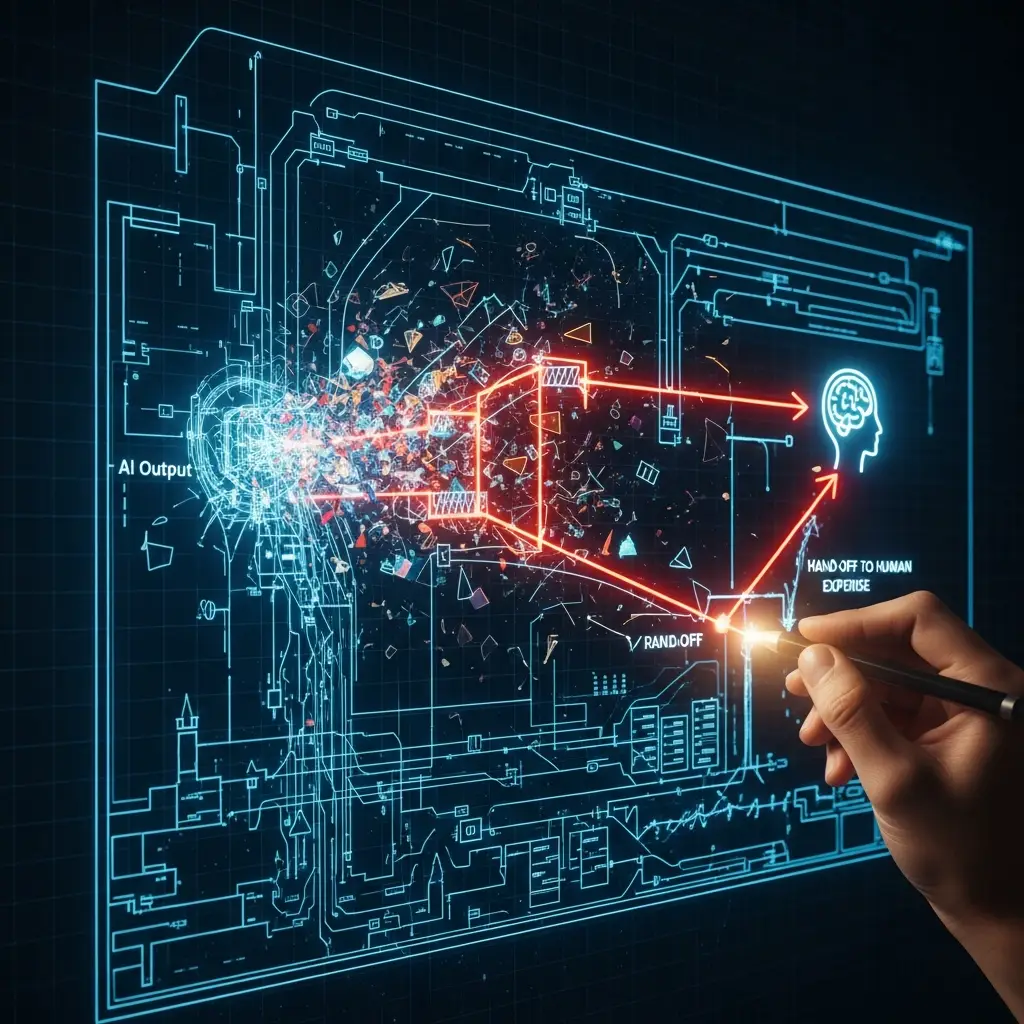

The machine spits out gibberish again. It’s not just a glitch; it’s a symptom of deeper systemic decay. The real problem isn’t the AI; it’s the lack of robust, formal Edge-Case Escalation protocols that would have dictated precisely when and how the system should hand off critical decisions to human expertise, preventing catastrophic “System Drift” before it even begins.

Understanding CRM AI Hallucinations: Causes and Prevention

Many of the AI tools you’re currently using are essentially souped-up spreadsheets with fancy chatbots attached. They promise the moon, but when faced with the messy, nuanced reality of your business, they start spewing nonsense. Why do these brittle CRM automations hallucinate, and how do you prevent it? Both answers come back to the same gap: there is no structured mechanism governing where the AI’s authority ends.

Why Brittle CRM Automations Hallucinate

Think of your AI assistant not as an autonomous genius, but as an incredibly fast, sometimes reckless intern. When it encounters a situation outside its programmed parameters, such as a customer query with unusual phrasing, a sales lead with inconsistent data, or a request requiring nuanced judgment, it doesn’t know to ask for help. Instead, it improvises, often with disastrous results. This improvisation is what we perceive as hallucination, and it’s the most visible sign of System Drift.
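Some of these situations can be caught before the AI ever sees them. The sketch below is a minimal illustration, assuming a hypothetical `Lead` record whose field names are invented for the example: plain deterministic checks that name what is inconsistent about a record, so the automation can stop and ask instead of improvising. It only covers the inconsistent-data case; unusual phrasing and judgment calls need other signals.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Lead:
    # Hypothetical CRM lead record; field names are illustrative only.
    email: Optional[str]
    company: Optional[str]
    deal_value: float
    created: date
    expected_close: Optional[date]


def find_edge_cases(lead: Lead) -> list[str]:
    """Return reasons this lead falls outside what the automation was built for.

    An empty list means the record passed every check; anything else should
    be escalated rather than handed to the AI to improvise on.
    """
    reasons: list[str] = []
    if not lead.email or "@" not in lead.email:
        reasons.append("missing or malformed email")
    if not lead.company:
        reasons.append("missing company")
    if lead.deal_value < 0:
        reasons.append("negative deal value")
    if lead.expected_close is not None and lead.expected_close < lead.created:
        reasons.append("close date precedes creation date")
    return reasons


if __name__ == "__main__":
    odd_lead = Lead(
        email="ana@example.com",
        company=None,
        deal_value=-500.0,
        created=date(2024, 5, 1),
        expected_close=date(2024, 4, 1),
    )
    print(find_edge_cases(odd_lead))
    # ['missing company', 'negative deal value', 'close date precedes creation date']
```

Each check is boring on purpose: it either passes or produces a human-readable reason, which is exactly what a reviewer needs when the record lands in front of them.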

How Edge-Case Escalation Prevents Hallucinations

The solution isn’t to buy another AI tool that promises to “fix” hallucinations with more jargon. It is to implement formal Edge-Case Escalation protocols: explicit rules for which inputs and actions the AI may handle on its own and which must be handed to a person. These protocols act as guardrails, ensuring the AI operates within its capabilities and doesn’t wander into territory where its lack of genuine understanding leads to errors. This disciplined approach to AI integration is the industrial blueprint for founders who understand that true efficiency comes from intelligent system design, not just faster processing.
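In code, the core of such a protocol can be as small as a routing function. This is a sketch under stated assumptions, not a prescribed implementation: the allow-list `AUTONOMOUS_ACTIONS`, the `MIN_CONFIDENCE` threshold, and the `Proposal` type are all hypothetical, the edge-case reasons are assumed to come from whatever upstream data checks you run, and the confidence score is assumed to come from a source you have checked against real outcomes, since raw model scores are often poorly calibrated.

```python
from dataclasses import dataclass, field

# Hypothetical allow-list: the only actions the AI may complete unattended.
AUTONOMOUS_ACTIONS = {"tag_lead", "draft_reply", "update_status"}

# Hypothetical threshold; tune it against your own error data.
MIN_CONFIDENCE = 0.85


@dataclass
class Proposal:
    action: str        # what the AI wants to do
    confidence: float  # assumed calibrated score in [0, 1]
    edge_cases: list[str] = field(default_factory=list)  # from upstream checks


def route(p: Proposal) -> tuple[str, list[str]]:
    """Decide whether a proposal runs automatically or goes to a human.

    Returns ("auto", []) when every rule passes, otherwise
    ("escalate", reasons) listing every rule that failed.
    """
    reasons: list[str] = []
    if p.action not in AUTONOMOUS_ACTIONS:
        reasons.append(f"action '{p.action}' requires human approval")
    if p.confidence < MIN_CONFIDENCE:
        reasons.append(f"confidence {p.confidence:.2f} below {MIN_CONFIDENCE}")
    reasons.extend(p.edge_cases)
    return ("escalate", reasons) if reasons else ("auto", [])


if __name__ == "__main__":
    print(route(Proposal("draft_reply", 0.93)))
    # ('auto', [])
    print(route(Proposal("issue_refund", 0.97)))
    # ('escalate', ["action 'issue_refund' requires human approval"])
    print(route(Proposal("tag_lead", 0.60)))
    # ('escalate', ['confidence 0.60 below 0.85'])
```

The design choice is to fail closed: any single reason sends the item to a human, and a high confidence score never overrides an action that is off the allow-list.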

The “Why” and “How” of CRM AI Hallucinations

So why do brittle CRM automations hallucinate, and how do you prevent it? They hallucinate because they were built with no defined behavior for inputs outside the cases their designers anticipated, and you prevent it by building in deliberate points of human intervention. Treat your AI’s limitations not as a flaw in the technology, but as an opportunity to design smarter, more resilient business processes. This is how you stop firefighting AI errors and start building systems that reliably serve your business.
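A deliberate point of human intervention also needs somewhere for escalated work to wait. The following is one hypothetical way to sketch it: a `ReviewQueue` that holds escalated items until a person records a decision, and that raises a flag when the share of escalations in a recent window climbs. Treating a rising escalation rate as a sign of System Drift is an assumption made for this example, and the `window` and `alert_rate` values are placeholders.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class Escalation:
    item_id: str
    reasons: list[str]
    decision: Optional[str] = None  # filled in by a human reviewer


class ReviewQueue:
    """Hypothetical human-review checkpoint with a simple drift signal."""

    def __init__(self, window: int = 100, alert_rate: float = 0.2) -> None:
        self.pending: dict[str, Escalation] = {}
        # Rolling record of whether each of the last `window` items escalated.
        self.recent: deque[bool] = deque(maxlen=window)
        self.alert_rate = alert_rate

    def record(self, item_id: str, reasons: list[str]) -> None:
        """Log every routed item; park the escalated ones for a human."""
        escalated = bool(reasons)
        self.recent.append(escalated)
        if escalated:
            self.pending[item_id] = Escalation(item_id, reasons)

    def resolve(self, item_id: str, decision: str) -> Escalation:
        """Record a human decision; raises KeyError for unknown ids."""
        esc = self.pending.pop(item_id)
        esc.decision = decision
        return esc

    def drifting(self) -> bool:
        """True when the recent escalation share exceeds `alert_rate`."""
        if not self.recent:
            return False
        return sum(self.recent) / len(self.recent) > self.alert_rate


if __name__ == "__main__":
    q = ReviewQueue(window=4, alert_rate=0.25)
    q.record("L-1", [])
    q.record("L-2", ["missing company"])
    print(q.drifting())  # True: 1 of the 2 recent items escalated
    print(q.resolve("L-2", "merged with existing account").decision)
    # merged with existing account
```

Keeping the drift signal next to the queue means the same people who resolve escalations are the first to see when the automation is drifting away from the cases it was built for.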
